We all care about cash, time, life, love, and if you’re doing computer graphics, you might also care about the memory consumption of your graphics card. Why? For the same reason you care when you are running out of cash.

I’ll explain why compressing textures matters and compare the available options. My personal goal is to be able to load a lot of full HD pictures on a tablet for a museum project. The analysis focuses on DXT1 compression and size. I’m looking forward to trying ETC1 and will update this blog post with the results in the future.

What are we dealing with

If you are building an application that displays a lot of HD pictures, this matters. We’ll start from this simple math: a full HD picture is 1920×1080 with 4 channels (RGBA). Whether your picture is stored as PNG or JPEG, your graphics card cannot understand that format and will store the image decompressed in its memory. So this image will eat:

1920 x 1080 x 4 = 8294400 bytes = 7.91MB

1920 x 1080 x 4 + mipmaps = 10769252 bytes = 10.27MB

That is in theory. It might be more if your graphics card doesn’t support NPOT (non-power-of-two) textures. In that case, the texture is usually resized to the closest POT (power-of-two) size available, which for us means 2048 x 2048. The size for POT is then:

2048 x 2048 x 4 = 16777216 bytes = 16MB

2048 x 2048 x 4 + mipmaps = 22369620 bytes = 21.33MB
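The power-of-two figures above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the helper names `next_pot` and `rgba_bytes` are mine, and it assumes a square POT texture and a full mipmap chain down to 1x1. For non-power-of-two sizes the mipmap total depends on how each level is rounded, so it may not match figures computed another way.

```python
def next_pot(n):
    """Smallest power of two greater than or equal to n."""
    pot = 1
    while pot < n:
        pot *= 2
    return pot


def rgba_bytes(width, height, mipmaps=False, bytes_per_pixel=4):
    """Bytes used by an uncompressed texture, optionally with its mipmap chain."""
    total = width * height * bytes_per_pixel
    while mipmaps and (width > 1 or height > 1):
        # Each mipmap level halves both dimensions, never going below 1.
        width, height = max(1, width // 2), max(1, height // 2)
        total += width * height * bytes_per_pixel
    return total


# Square POT texture big enough for a 1920x1080 picture (assumption from the text).
side = max(next_pot(1920), next_pot(1080))

print(rgba_bytes(1920, 1080))        # 8294400
print(rgba_bytes(side, side))        # 16777216
print(rgba_bytes(side, side, True))  # 22369620
```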

Compression types

There are plenty of compression types available. The most common are S3TC (including DXT1, DXT3, DXT5), originally from S3’s Savage 3D, LATC from Nvidia, PVRTC from PowerVR, ETC1 from Ericsson…

Not all of them are available everywhere; it depends a lot on your hardware. Here is a list of devices, vendors, and the texture compression formats available on each (mostly tablets and phones, with one desktop card for comparison). Thanks to this Stack Overflow thread about supported OpenGL ES 2.0 extensions on Android devices.

| Device | Vendor | DXT1 | S3TC | LATC | PVRTC | ETC1 | 3DC | ATC |
|---|---|---|---|---|---|---|---|---|
| GeForce GTX 560 (desktop computer) | NVIDIA | X | X | X | | | | |
| Motorola Xoom | NVIDIA | X | X | X | | X | | |
| Nexus One | Qualcomm | | | | | X | X | X |
| Toshiba Folio | NVIDIA | X | X | X | | X | | |
| LGE Tablet | NVIDIA | X | X | X | | X | | |
| Galaxy Tab | PowerVR | | | | X | X | | |
| Acer Stream | Qualcomm | | | | | X | X | X |
| Desire Z | Qualcomm | | | | | X | X | X |
| Spica | Samsung | X | X | | | | | |
| HTC Desire | Qualcomm | | | | | X | X | X |
| VegaTab | NVIDIA | X | X | X | | X | | |
| Nexus S | PowerVR | | | | X | X | | |
| HTC Desire HD | Qualcomm | | | | | X | X | X |
| HTC Legend | Qualcomm | | | | | X | X | X |
| Samsung Corby | Qualcomm | | | | | X | X | X |
| Droid 2 | PowerVR | | | | X | X | | |
| Galaxy S | PowerVR | | | | X | X | | |
| Milestone | PowerVR | | | | X | X | | |

We can see that ETC1 is the standard compression for OpenGL ES 2. Unfortunately, it does not work on desktop.

PVRTC is specific to PowerVR devices: it’s the standard on iPad/iPhone.
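A table like this one is typically built from the extension string that each driver reports. As a rough sketch, the function below maps the table's column names to the usual OpenGL extension names and checks which ones appear in such a string. The `supported_formats` helper and the `sample` string are made up for illustration, and a driver may advertise the same format under another extension name, so treat the mapping as a starting point rather than a complete list.

```python
# Common OpenGL extension names, keyed by the column names used in the table.
FORMAT_EXTENSIONS = {
    "DXT1": "GL_EXT_texture_compression_dxt1",
    "S3TC": "GL_EXT_texture_compression_s3tc",
    "LATC": "GL_EXT_texture_compression_latc",
    "PVRTC": "GL_IMG_texture_compression_pvrtc",
    "ETC1": "GL_OES_compressed_ETC1_RGB8_texture",
    "3DC": "GL_AMD_compressed_3DC_texture",
    "ATC": "GL_AMD_compressed_ATC_texture",
}


def supported_formats(extension_string):
    """Return the compression formats advertised in a GL_EXTENSIONS string."""
    available = set(extension_string.split())
    return [name for name, ext in FORMAT_EXTENSIONS.items() if ext in available]


# Hypothetical extension string, shaped like what a PowerVR device might report.
sample = (
    "GL_OES_compressed_ETC1_RGB8_texture "
    "GL_IMG_texture_compression_pvrtc "
    "GL_OES_texture_npot"
)
print(supported_formats(sample))  # ['PVRTC', 'ETC1']
```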

Using DXT1

If you use DXT1, you need a POT image. DXT1 doesn’t work on NPOT.

Note that your graphics card can convert an image to DXT1 by itself, without any external tool, through dedicated OpenGL functions. But I wanted to precompute the compressed textures.

Nvidia Texture Tools includes converters, but you need an Nvidia card. For everyone else, you might want to look at libsquish. It’s designed to compress a raw image to DXTn in software.

The result will not be a DXT1 “file”, because DXT1 is only the compression format. The result is stored in a DDS file, which we’ll come back to later.

If you want to use libsquish from Python, you might want to apply my patch, available on their issue tracker.

For DXT1, the file size does not depend on the image content:

DXT1 2048x2048 RGBA = 2097152 bytes = 2MB

That’s already a big improvement. DXTn is able to store the mipmaps of the texture too. With mipmaps, the size becomes:

DXT1 2048x2048 RGBA + mipmap = 2795520 bytes = 2.67MB
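The fixed size comes from how DXT1 works: every 4x4 block of pixels is stored in 8 bytes, whatever the pixels contain. Here is a small sketch of that calculation. The `dxt1_bytes` helper and its `levels` parameter are my own naming. Note that the mipmap figure above matches six levels (2048 down to 64); a full chain down to 1x1 comes out slightly larger.

```python
def dxt1_bytes(width, height, levels=1):
    """Bytes used by a DXT1 texture with the given number of mipmap levels."""
    total = 0
    for _ in range(levels):
        # DXT1 stores each 4x4 pixel block in 8 bytes; small levels
        # still occupy at least one block.
        blocks_x = max(1, (width + 3) // 4)
        blocks_y = max(1, (height + 3) // 4)
        total += blocks_x * blocks_y * 8
        # The next mipmap level halves both dimensions, never below 1.
        width, height = max(1, width // 2), max(1, height // 2)
    return total


print(dxt1_bytes(2048, 2048))      # 2097152  (base level only)
print(dxt1_bytes(2048, 2048, 6))   # 2795520  (levels 2048 down to 64)
print(dxt1_bytes(2048, 2048, 12))  # 2796216  (full chain down to 1x1)
```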

Comparison table

| Type | Resolution | File size | GPU size | Images in a 256MB GPU | Images in a 512MB GPU |
|---|---|---|---|---|---|
| Raw RGBA image (POT) | 2048 x 2048 | - | 16384KB | 16 | 32 |
| PNG image (NPOT) | 1920 x 1080 | 4373KB | 8040KB | 32 | 65 |
| PNG image in reduced POT resolution | 1024 x 1024 | 1268KB | 4096KB | 64 | 128 |
| DXT1 without mipmap | 2048 x 2048 | 2048KB | 2048KB | 128 | 256 |
| DXT1 without mipmap, reduced | 1024 x 1024 | 512KB | 512KB | 512 | 1024 |
| DXT1 with mipmap | 2048 x 2048 | 2730KB | 2730KB | 96 | 192 |
| DXT1 with mipmap, reduced | 1024 x 1024 | 682KB | 682KB | 384 | 768 |

Once we use compression, we can see that:

- The file size is the same as the GPU size

- Even when comparing a POT DXT1 texture with an uncompressed NPOT image, we can still store 4x more images on the GPU
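The image counts in the table are a simple division of the GPU memory by the size of one texture. The sketch below redoes that division for a few rows. The `images_that_fit` name is mine, and it treats the whole memory as free for textures, so the results are upper bounds rather than what a real application would get.

```python
def images_that_fit(gpu_mb, texture_kb):
    """How many textures of texture_kb fit in gpu_mb of memory (upper bound)."""
    return (gpu_mb * 1024) // texture_kb


rows = [
    ("Raw RGBA 2048x2048", 16384),
    ("DXT1 2048x2048", 2048),
    ("DXT1 2048x2048 with mipmap", 2730),
    ("DXT1 1024x1024", 512),
]
for label, size_kb in rows:
    print(label, images_that_fit(256, size_kb), images_that_fit(512, size_kb))
```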

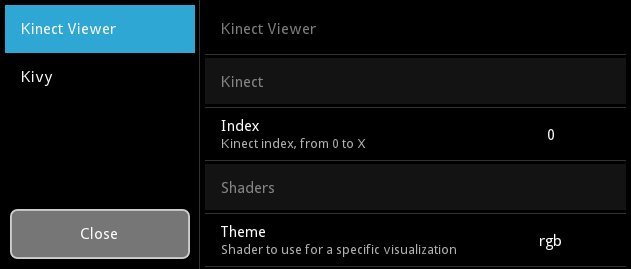

And with Kivy?

DXT1 itself is the compression format, but you cannot use it directly like that. You need to store the result in a formatted file: DDS.
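To give an idea of what the DDS container adds around the compressed data, here is a minimal sketch that reads the fields most relevant here from a DDS header: the dimensions, the mipmap count, and the FourCC code naming the compression. The `read_dds_header` function is my own illustration, not Kivy's loader, and the header built at the end is synthetic so that the example runs without a file.

```python
import struct


def read_dds_header(data):
    """Extract size, mipmap count and FourCC from the first 128 bytes of a DDS file."""
    if data[:4] != b"DDS ":
        raise ValueError("not a DDS file")
    # After the 4-byte magic come seven little-endian uint32 fields:
    # dwSize, dwFlags, dwHeight, dwWidth, dwPitchOrLinearSize, dwDepth, dwMipMapCount.
    _size, _flags, height, width, _pitch, _depth, mipmaps = struct.unpack_from("<7I", data, 4)
    # The FourCC of the pixel format sits at byte offset 84 of the file.
    fourcc = data[84:88].decode("ascii", "replace")
    # Simplification: dwMipMapCount is only meaningful when the matching
    # header flag is set, which this sketch does not check.
    return {"width": width, "height": height, "mipmaps": mipmaps, "fourcc": fourcc}


# Synthetic header for demonstration: magic + 124-byte header, DXT1, no extra mipmaps.
header = bytearray(128)
header[:4] = b"DDS "
struct.pack_into("<7I", header, 4, 124, 0, 2048, 2048, 2097152, 0, 1)
header[84:88] = b"DXT1"

print(read_dds_header(bytes(header)))
# {'width': 2048, 'height': 2048, 'mipmaps': 1, 'fourcc': 'DXT1'}
```

With a real file, you would pass the first 128 bytes read in binary mode instead of the synthetic header.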

Kivy is already able to read DDS files. But you must make sure that your graphics card supports DXT1 or S3TC. Look at the gl_has_capability() function in Kivy for that.