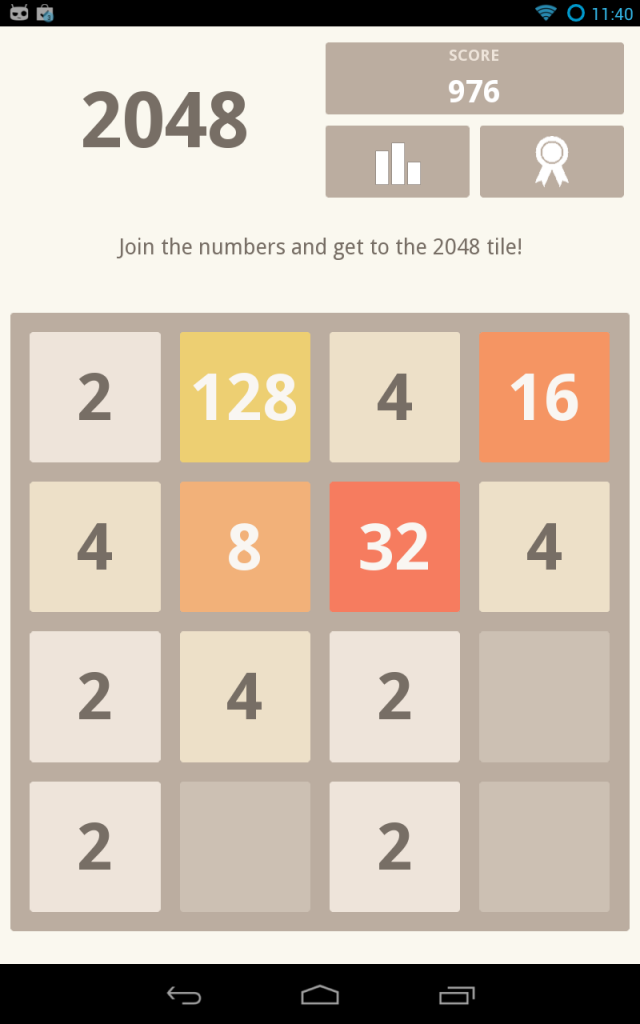

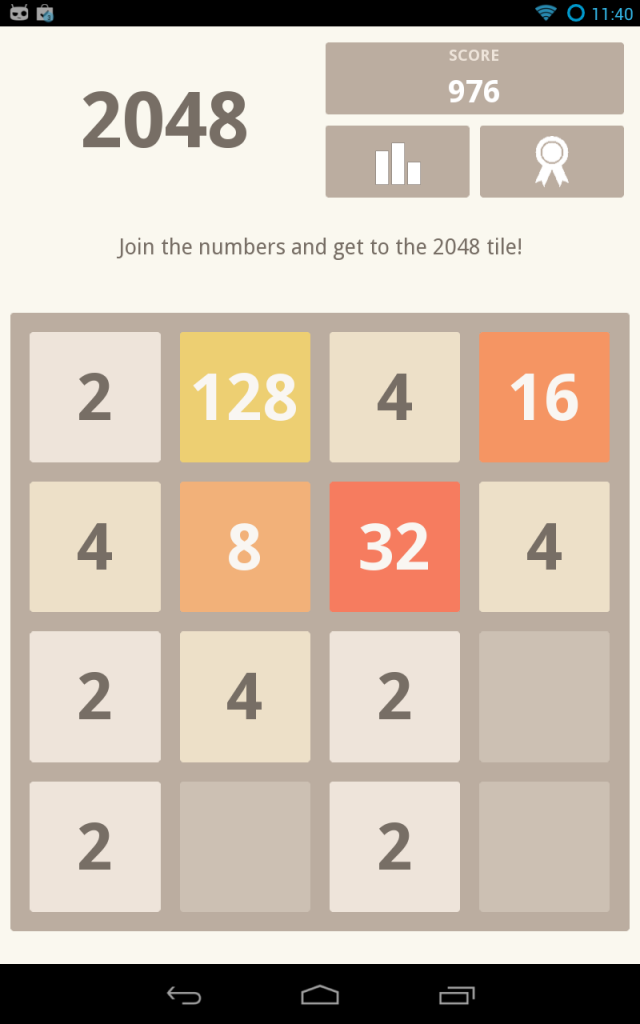

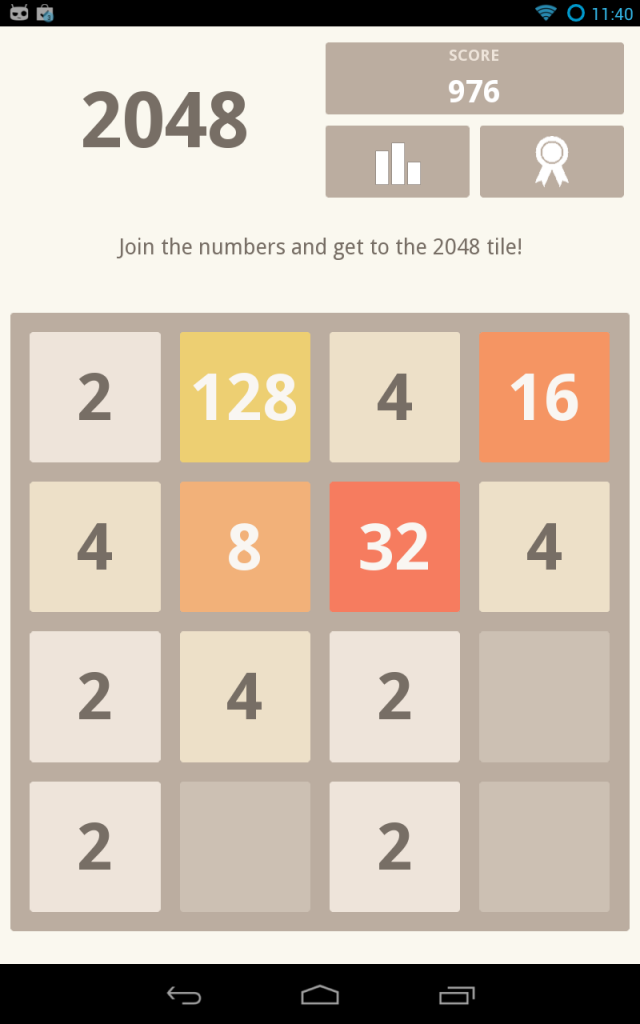

If you don’t know the 2048 puzzle yet, you should, but be careful: it’s time-consuming. I won’t go through the whole history of the game’s clones; I’ll just say that if you want to play it on the Web, play Gabriele Cirulli’s 2048. The author said he won’t make an official iOS/Android app, so… let’s give Kivy a try.

Only 2 hours of coding were needed for the first published version of the game. Then I learned about the Google Play Games services APIs to integrate a leaderboard and achievements. After a couple of hours during the night, what I can say is: be patient! Even if you follow the documentation carefully, you need to wait a few hours before your app_id works on their servers, even in test mode. They never warn us about it.

Once the setup is done and it starts working, logging in is easy:

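```python
from jnius import autoclass, PythonJavaClass, java_method
import android.activity

PythonActivity = autoclass('org.renpy.android.PythonActivity')
GameHelper = autoclass('com.google.example.games.basegameutils.GameHelper')

class GameHelperListener(PythonJavaClass):
    # Python implementation of GameHelper's Java listener interface
    __javainterfaces__ = ['com.google.example.games.basegameutils.GameHelper$GameHelperListener']

    @java_method('()V')
    def onSignInFailed(self):
        print('Play Games sign-in failed')

    @java_method('()V')
    def onSignInSucceeded(self):
        print('Play Games sign-in succeeded')

def _on_activity_result(request_code, response_code, data):
    # Forward the sign-in Activity result back to GameHelper
    gh_instance.onActivityResult(request_code, response_code, data)

gh_instance = GameHelper(PythonActivity.mActivity, GameHelper.CLIENT_ALL)
gh_instance_listener = GameHelperListener()  # keep a Python reference so it isn't garbage-collected
gh_instance.setup(gh_instance_listener)
gh_instance.onStart(PythonActivity.mActivity)

# Unbind first so the handler is never registered twice
android.activity.unbind(on_activity_result=_on_activity_result)
android.activity.bind(on_activity_result=_on_activity_result)
```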

This is how you unlock an achievement:

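```python
from jnius import autoclass

Games = autoclass('com.google.android.gms.games.Games')

# uid is the Google ID of the achievement you want to unlock
if gh_instance.isSignedIn():
    Games.Achievements.unlock(gh_instance.getApiClient(), uid)
```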
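Where you call this depends on your game. As one hypothetical example for 2048, the sketch below maps tile values to achievement IDs and unlocks each one at most once. The `ACHIEVEMENT_ID_*` strings, `TILE_ACHIEVEMENTS`, `achievements_for` and `unlock_reached` are placeholders for illustration, not part of the Play Games API; the real unlock call is passed in as a function so the logic can run off-device.

```python
# Hypothetical achievement IDs: replace them with the IDs from your
# Play Games console. Keys are the tile values a player must reach.
TILE_ACHIEVEMENTS = {
    256: 'ACHIEVEMENT_ID_256',
    512: 'ACHIEVEMENT_ID_512',
    1024: 'ACHIEVEMENT_ID_1024',
    2048: 'ACHIEVEMENT_ID_2048',
}


def achievements_for(max_tile, already_unlocked):
    """Return the IDs earned by reaching max_tile that are not yet
    unlocked, in ascending tile order."""
    return [
        uid
        for tile, uid in sorted(TILE_ACHIEVEMENTS.items())
        if max_tile >= tile and uid not in already_unlocked
    ]


def unlock_reached(max_tile, already_unlocked, unlock):
    """Call unlock(uid) for each newly earned achievement and remember it
    locally, so the same unlock request is not sent again."""
    for uid in achievements_for(max_tile, already_unlocked):
        unlock(uid)
        already_unlocked.add(uid)
```

On the device you could call it as `if gh_instance.isSignedIn(): unlock_reached(max_tile, unlocked, lambda uid: Games.Achievements.unlock(gh_instance.getApiClient(), uid))`. Keeping the sign-in check outside matters: otherwise an achievement could be recorded as unlocked locally without the request ever reaching the server.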

And this is how you put the user’s score on a leaderboard:

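```python
from jnius import autoclass

Games = autoclass('com.google.android.gms.games.Games')

# uid is the Google ID of the leaderboard you've created.
# You can have multiple leaderboards, each with its own ID.
if gh_instance.isSignedIn():
    Games.Leaderboards.submitScore(gh_instance.getApiClient(), uid, score)
```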
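With the `isSignedIn()` check, a score obtained while the player is signed out is simply dropped. One possible way around that, sketched below under the assumption that higher scores are better (as in 2048), is to keep the best pending score per leaderboard and send it once sign-in succeeds. `PendingScores` is a hypothetical helper; `is_signed_in` and `submit` are injected so the class can be exercised without Android.

```python
class PendingScores:
    """Hypothetical helper: hold leaderboard submissions made while the
    player is signed out and replay them after sign-in succeeds."""

    def __init__(self, is_signed_in, submit):
        self._is_signed_in = is_signed_in  # e.g. gh_instance.isSignedIn
        self._submit = submit              # callable(uid, score)
        self._pending = {}                 # leaderboard uid -> best pending score

    def submit(self, uid, score):
        if self._is_signed_in():
            self._submit(uid, score)
        else:
            # Assumes higher is better, so only the best pending score is kept
            self._pending[uid] = max(score, self._pending.get(uid, score))

    def flush(self):
        """Send and clear every pending score (call it after sign-in)."""
        pending, self._pending = self._pending, {}
        for uid, score in pending.items():
            self._submit(uid, score)
```

On Android, `is_signed_in` would be `gh_instance.isSignedIn`, `submit` would wrap `Games.Leaderboards.submitScore(gh_instance.getApiClient(), uid, score)`, and `flush()` would be called from the listener’s `onSignInSucceeded`.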

There is a little more code around this, but overall, the new Play Games services APIs are now easy to use. I hope these snippets help people integrate them into their apps. Somebody needs to start a Python library for managing the Google Play Games services and Game Center on iOS.
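As a rough idea of what such a library could look like, here is a minimal sketch of a platform-neutral interface with an Android backend built on the pyjnius calls above, plus a backend that only records calls for desktop runs and tests. All class and method names are hypothetical; a Game Center backend for iOS would be one more subclass.

```python
from abc import ABC, abstractmethod


class GameServices(ABC):
    """Hypothetical platform-neutral interface for achievements and leaderboards."""

    @abstractmethod
    def unlock_achievement(self, uid): ...

    @abstractmethod
    def submit_score(self, uid, score): ...


class NullGameServices(GameServices):
    """Desktop/testing backend: records calls instead of contacting a server."""

    def __init__(self):
        self.calls = []

    def unlock_achievement(self, uid):
        self.calls.append(('unlock', uid))

    def submit_score(self, uid, score):
        self.calls.append(('score', uid, score))


class PlayGamesServices(GameServices):
    """Android backend wrapping GameHelper and the Games class from pyjnius."""

    def __init__(self, gh_instance, games):
        self.gh = gh_instance  # the GameHelper instance
        self.games = games     # autoclass('com.google.android.gms.games.Games')

    def unlock_achievement(self, uid):
        if self.gh.isSignedIn():
            self.games.Achievements.unlock(self.gh.getApiClient(), uid)

    def submit_score(self, uid, score):
        if self.gh.isSignedIn():
            self.games.Leaderboards.submitScore(self.gh.getApiClient(), uid, score)
```

The game code then only talks to a `GameServices` object, and the platform decides at startup which backend to create.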

Have a look at the source code for an example of the integration with the Play Games services APIs.